Facebook today announced that three independent studies have found that the company's efforts to fight the spread of false news on its site might be working.

The three studies -- conducted respectively by New York University and Stanford University researchers, the University of Michigan, and French fact-checking organization Les Décodeurs -- each found that the volume of false news on Facebook has decreased. Some also found that engagement with the false news content still present on the site had gone down.

We recently ran a survey to see if users were noticing less spam on the social network, and despite today's announcement, it seems like misinformation might not be totally eradicated just yet.

Here's what each study found, and how it compares to what users report seeing on their News Feeds.

Three Studies of Facebook's Fight Against False News

"Trends in the Diffusion of Misinformation on Social Media"

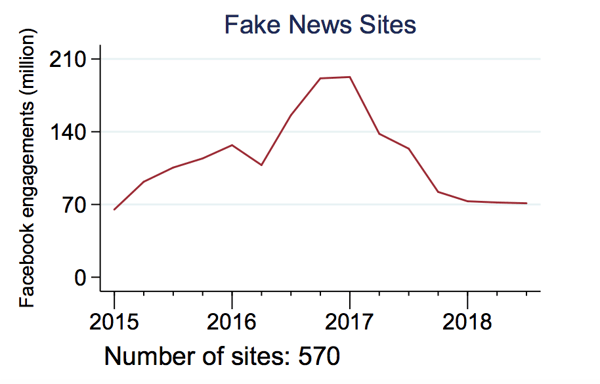

The first study -- conducted by New York University's Hunt Allcott, along with Stanford University's Matthew Gentzkow, and Chuan Yu -- observed the amount of engagement on Facebook and Twitter with content from 570 publishers that had been labeled as "false news," according to earlier studies and reports. And while the study cites where it obtained this list of 570 sites, it doesn't actually indicate what they are.

The team then used content sharing and tracking platform BuzzSumo to measure how much engagement -- shares, comments, and such reactions as Likes -- was received by all stories published by these sites between January 2015 and July 2018 on Facebook and Twitter.

The results: Following November 2016, interactions with this content fell by over 50% on Facebook. The study also indicated, however, that shares of this content on Twitter increased.

Source: Allcott, Gentzkow and Yu

It's important to note that a U.S. presidential election took place in November 2016, in the run-up to which Facebook was weaponized by foreign actors in a misinformation campaign intended to influence the election's outcome.

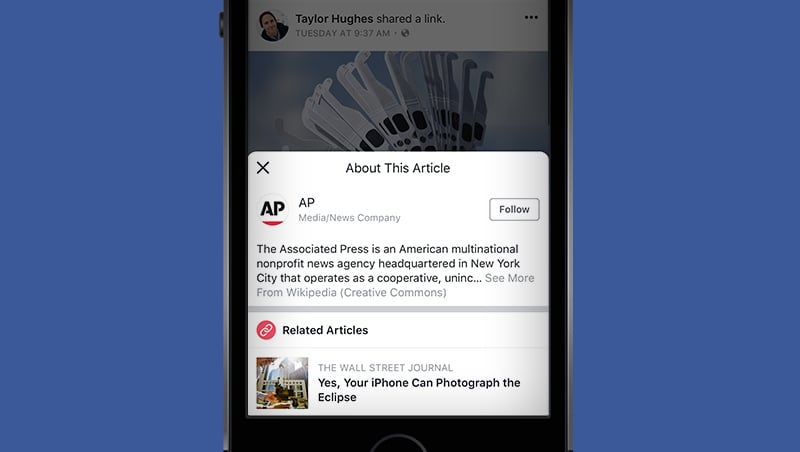

Since then, Facebook has widely publicized its fight against the spread of such misinformation -- which includes false news -- and points to this study as evidence of that fight's success.

"Iffy Quotient: A Platform Health Metric for Misinformation"

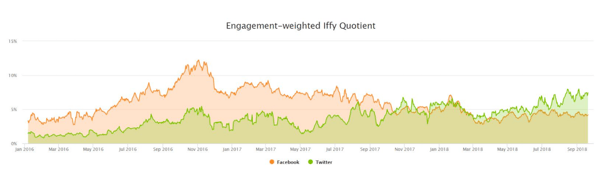

The second study, conducted by researchers at the University of Michigan, relied on a measure of false news engagement referred to as the "Iffy Quotient" -- which takes into account how much content from sites known for publishing misinformation is "amplified" on social media.

Why such a non-committal word, like "iffy"? According to the study, the name is a tribute to the often mixed, subjective definitions of what constitutes "false news." In this case, it includes "sites that have frequently published misinformation and hoaxes in the past," as measured by such fact-checking bodies as Media Bias/Fact Check and Open Sources.

This study largely relied on NewsWhip, a site that measures the most popular links shared on social media, as well as the engagement -- again, shares, comments, and such reactions as Likes -- received by each link.

The researchers then isolated the links from NewsWhip that were classified as "iffy," examining how much engagement they received over time, between January 2016 and September 2018.

Source: University of Michigan

The results, according to the study's authors, aligned with those of the first study, showing "a long-term decline in Facebook’s Iffy Quotient since March 2017."

"False Information Circulates Less and Less on Facebook"

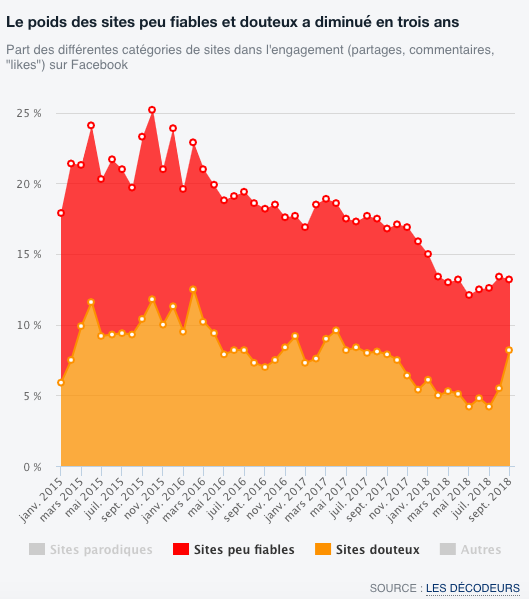

Finally, a study conducted by Les Décodeurs -- a fact-checking division of French newspaper Le Monde -- concluded that Facebook engagement with content from publishers classified as “unreliable or dubious sites” has decreased by half within France since 2015.

Source: Les Décodeurs. Translation: "The weight of unreliable and doubtful sites has decreased in three years. Share of different categories of sites in the engagement (shares, comments, 'likes') on Facebook." "Sites peu fiables" = "unreliable websites." "Sites douteux" = "doubtful websites."

What Do Users Report Seeing on Facebook?

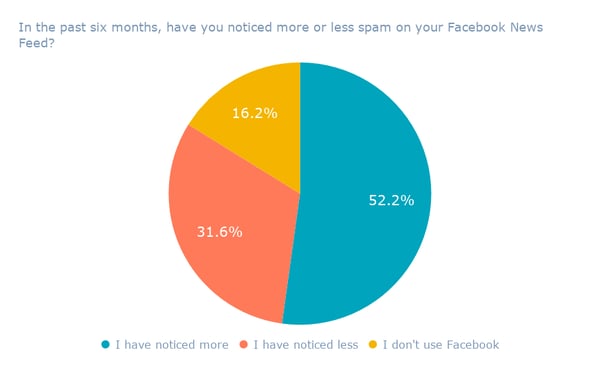

While the above three studies point to the possible success of Facebook's efforts to curb the spread of false news and misinformation, the group of users we surveyed might not yet be seeing the impact of Facebook’s anti-spam measures.

We asked 831 internet users across the U.S., UK, and Canada: In the past six months, have you noticed more or less spam on your Facebook News Feed?

Over half of respondents report seeing more spam in their News Feeds over the past six months: a figure up from the 47% who reported seeing more spam in their feeds in July 2018, when we ran a preliminary survey.

During that same time, we ran another survey in which over 78% of respondents indicated that they would count "fake news" as spam content.

These combined findings raise a question: If independent research, which Facebook says it did not fund, points to such success in its efforts to curb the spread of false news, why does a growing number of users report seeing more of it in the News Feed?

There could be a number of explanations, one being heightened awareness. Since first discovering that it was weaponized for a coordinated misinformation campaign leading up to the 2016 U.S. presidential election, Facebook has been more forthcoming about further evidence it finds of bad actors misusing its site for similar purposes.

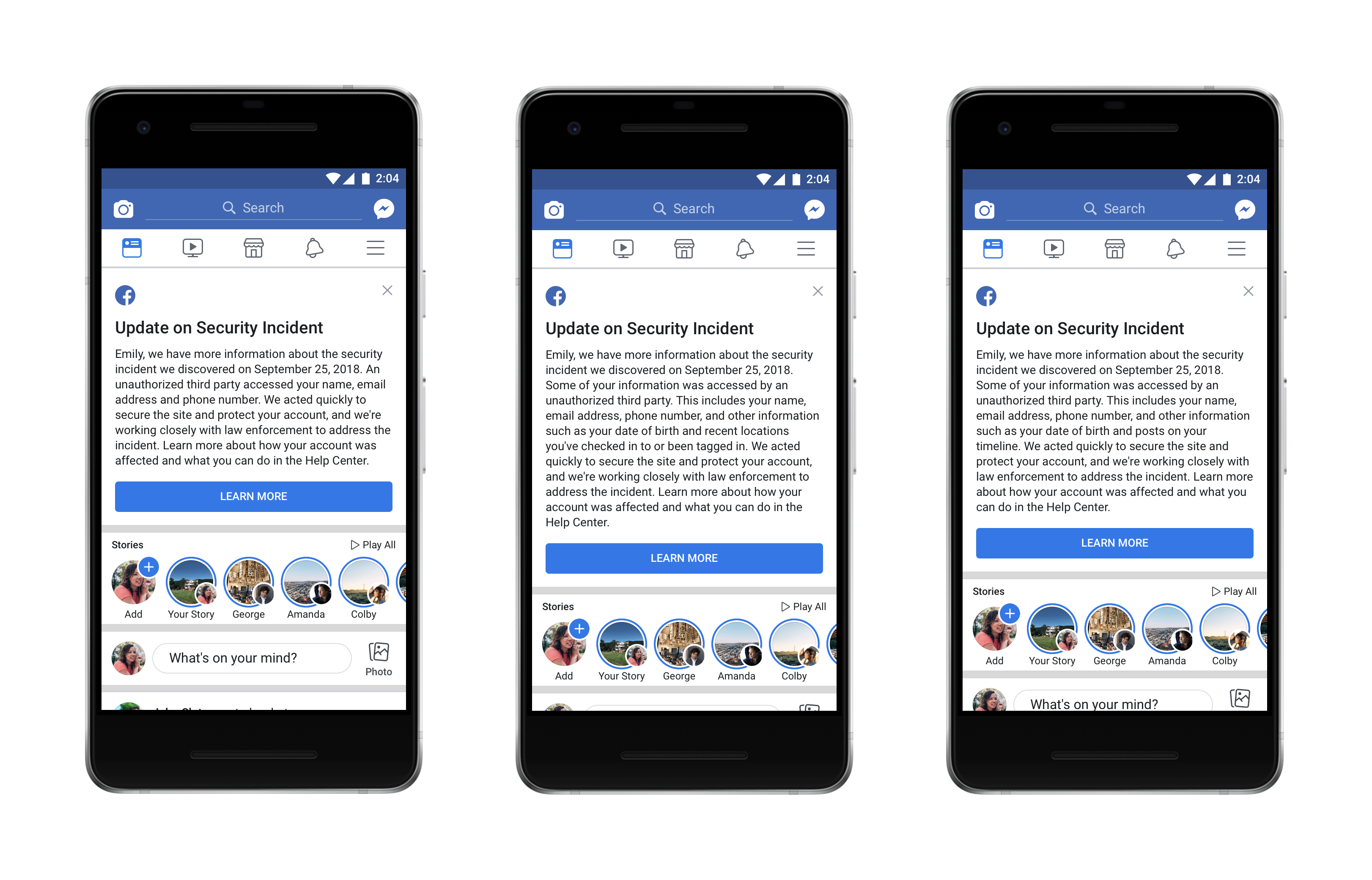

In fact, the Wall Street Journal reported today that -- according to its sources -- the bad actors behind a September data attack that scraped the personal information of 30 million Facebook users were "spammers that present[ed] themselves as a digital marketing company ... looking to make money through deceptive advertising."

With such stories continuing to make headlines, it could be that Facebook users are more attuned and sensitive to the possible misleading or spammy nature of the content they see in their News Feeds, causing them to report seeing more misinformation.

That sensitivity could be compounded by the approach of the 2018 midterm elections in the U.S., where the highest percentage of respondents in our survey reported seeing more spam in their News Feeds.

[Chart: Responses by Region]

The imminent timing of such a pivotal event could also heighten user awareness, as the topic of the midterm elections continues to dominate headlines, national dialogue, and televised ads. Consider, too, that our research also shows that about a third of internet users don't believe that Facebook's efforts to prevent election meddling will work at all.

But as Facebook's various efforts -- or, at the very least, the attention the company strives to draw to them -- continue, so will our measurement of user sentiment. Stay tuned.
