There's nothing quite like an anniversary to shift your perspective on things.

It certainly forces us to look at things in retrospect -- where we were then, and where we are now, however many years later. And to commemorate its 20th anniversary, that's what Google did today with an event in San Francisco.

There was a celebration, of course -- but it was less passed hors d'oeuvres, and more, "Here's where we're going next."

Here are some of the most important announcements made today.

1. Changes to Visual Results

A New (Bigger) Home for AMP Stories

You might be familiar with the AMP (Accelerated Mobile Pages) Project: Google's initiative to help and encourage publishers to create pages that both load fast and come with an overall positive user experience.

Earlier this year, Google introduced the AMP Story: fast-loading content that's almost completely visual, and very immersive.

If you've never seen AMP stories, that's okay -- Google announced today that it's making them more discoverable by listing them both in Google Image search results and within Google Discover (formerly Google Feed -- more on that later).

Featured Videos

Google also announced today that it's using what sounds like machine learning to help users discover the most helpful video content for their search queries.

Using the example of visiting a national park for the first time, the company explains that it now understands a video's content well enough that it's able to show you the videos that are the most applicable to your situation -- for example, the most important landmarks to see within that park.

Image credit: Google

More Context for Image Search Results

For some of us, a Google Image search is a favorite pastime (just saying). Starting this week, the company said at today's event, Image search results will have more context -- like captions that clearly indicate where the image is published, and more suggested, similar search terms at the top of the page.

Image credit: Google

Some of these changes had already been introduced on mobile -- now, they'll take effect on desktop.

Google Lens for Image Search Results

Google introduced Lens -- an artificial-intelligence (AI)-powered technology that helps answer people's questions about the photos they take, like figuring out the breed of a dog -- in May at its annual I/O developer conference.

Now, Lens will be available in Image search results to help provide even further context. It seems like the most applicable use case here will be for shopping -- for example, if you view an image that has several objects within it, Lens can analyze (and usually identify) each of those objects.

Users can then select one of those given objects, after which Google will float links to places where they can be purchased.

Image credit: Google

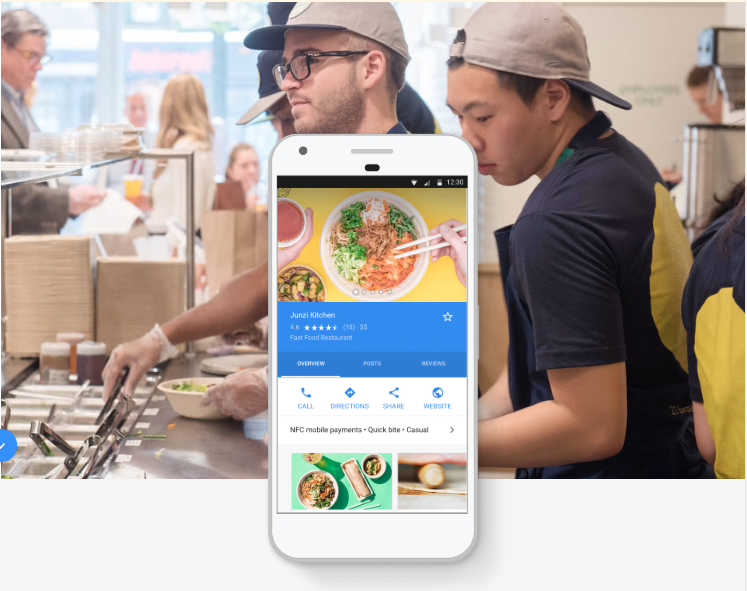

2. Google Feed Is Now Discover

Google first rolled out the Feed feature within its app for the same reason that many other networks and platforms have a feed: To help users see what's new and discover content.

Google announced today that it's leaning into that second purpose: discovery. Hence, Google Feed has a new name: Discover.

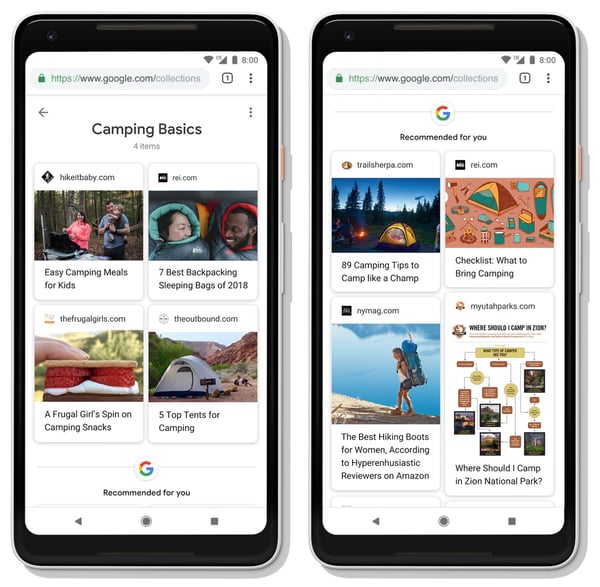

But it also has a new look, according to the company's official statement -- again, to provide more context. Within Discover, the content featured will have a topic header to let the user know why she's seeing it. And, if the user wants to explore that topic further, it'll be a native and seamless process.

Image credit: Google

Discover will also be more personalized. Users will get more customization options for the content featured in the Discover tab, in multiple languages, and Discover will no longer take the age of the content into account -- as long as it's relevant.

For example, if I'm visiting Honolulu for the first time and want to search for vegan restaurants there, Discover might show me content around that query that isn't necessarily new, but that I personally have not seen before -- and still answers my question.

Image credit: Google

Don't have the Google app? No problem. Discover will also be rolling out on the Google.com homepage across all mobile browsers. What isn't quite so clear is whether users have to be signed in to Google to use Discover properly -- but given the emphasis on personalization, it's likely that they do.

3. Picking Up Where You Left Off With Activity Cards

I'm not sure about you, but when I'm researching a story, I have a very bad habit of finding a helpful resource, reading it, and abandoning it.

That is, until I need to visit that resource again for a follow-up story, leading to an all-too-often futile search for a page I've already visited.

Fortunately, Google has introduced activity cards to help forgetful, in-a-rush researchers like me "retrace your steps." Think of these cards as visual representations of your Search history, except instead of having to go sifting through it, pages you've previously visited while researching the same topic will appear in Search results.

Image credit: Google

An additional new feature to help users hang onto helpful resources is Collections, which allows you to add a page from your activity cards to a collection of content pieces on a given topic.

Image credit: Google

4. The Knowledge Graph’s New Layer

There was an underlying theme throughout all of the announcements today: Understand what the user is looking for. After all, for quite some time now, that's been Google's axiom behind algorithm changes and new "smart" features that it's introduced in other products, like Gmail.

One way Google will further enhance its understanding of user queries -- to add more context to them, make them more relevant, and help the user learn more about a given topic down to the very detail of what she wants to know -- is by adding another layer to its Knowledge Graph: the Topic Layer.

The Google Knowledge Graph is essentially the technology used by the company to make these features "smart" -- in its own words, to make "connections between people, places, things and facts about them." That's how it knows to suggest topics like "restaurants in Los Angeles" if, say, you're also searching for flights there.

The Topic Layer adds another level of understanding the many nuances of a given topic, in part by "analyzing all the content that exists on the web for a given topic and develops hundreds and thousands of subtopics."

Of course, what Google doesn't say in its announcements outright is that much of this analysis done on behalf of a given user is based on that person's previous Search behavior -- what she's looked for in the past, travel she's booked, and content or pages she's repeatedly viewed.

In fact, with the plethora of new options to save content for later, it's fair to predict that Google will use that archive to help inform its search results.

It's not a new concept, but in this particular case, it lends itself to the search engine's giant credo of, in a few words, really figuring us out.

Featured image credit: Google
