If you pretend there's not a problem, does it actually go away?

Of course not. But the way many businesses treat customer satisfaction and customer success, it seems like that's common wisdom.

Measuring customer satisfaction allows you to diagnose potential problems, both at the individual and aggregate level. More importantly, it allows you to improve over time.

So, how do you gauge customer satisfaction? It all comes down to customer satisfaction surveys. In this post, we'll discuss what you need to know about designing productive customer satisfaction surveys. To help you find the exact information you're looking for, here's a table of contents to guide you through it.

- What is a customer satisfaction survey?

- Why are customer satisfaction surveys important?

- Customer Satisfaction Survey Examples and Templates

- Customer Satisfaction Question Types and Survey Design

- When should you send customer satisfaction surveys?

- Customer Satisfaction Survey Best Practices

What are customer satisfaction surveys?

Customer satisfaction surveys measure a business's customer satisfaction score, or CSAT: a basic measure of how happy or unhappy a customer was with a product or service, or with a specific interaction with the customer service team.

5 Free Customer Satisfaction Survey Templates

Easily measure customer satisfaction and begin to improve your customer experience.

- Net Promoter Score

- CSAT Score

- Customer Effort Score

- And more!

In other words, customer satisfaction surveys are used to gauge how your customers feel about your company or a given experience with your company. These surveys can come in many different forms, and you can use these surveys to segment customers based on satisfaction scores, measure relative customer satisfaction scores over time, or find insights for customer experience improvements.

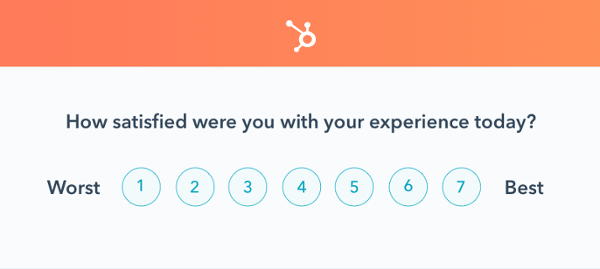

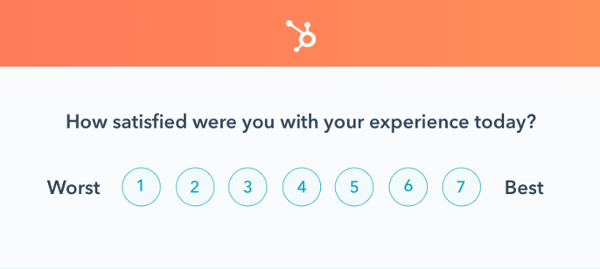

Here's a basic example of what a customer feedback survey might look like in your email inbox or within an app:

Why Customer Satisfaction Surveys Are Important

New companies start up every day, and competition is abundant. One differentiating factor is what your customers think about you. Big companies like Apple thrive by considering their customers' needs and adding new, requested features to their products.

Customers share good experiences with an average of 9 people and poor experiences with about 16 (nearly two times more) people — so you must figure out customer issues and try your best to solve them before they go viral on Yelp or social media.

There are so many benefits of asking for feedback on customer satisfaction:

1. Customer feedback provides insights to improve the product and overall customer experience.

It's imperative to find out what your customers think about your product because they can tell you what it needs for improvement.

We asked one of HubSpot's Market Research managers, Amy Maret, for her thoughts on the importance of survey feedback. She said, “Conducting regular customer satisfaction surveys allow businesses to keep a finger on the pulse of their most important relationship - their relationship with their customers! We see over and over that having an exceptional customer experience is absolutely critical to a business’ success, so being able to see in real-time how your customers are feeling, diagnose potential issues, and act quickly as soon as satisfaction starts trending down, is a huge advantage to any organization.”

Satisfaction surveys provide valuable information that can lead to product innovation upon analysis. Listening to the customer keeps them happy and improves their experience as they continue to do business with you.

2. Customer feedback can improve customer retention.

If your customer is unhappy, you can listen to them, work toward making the product more customer-friendly, and develop a deeper bond with them.

Maret continues, “Customer satisfaction surveys can (and should) go beyond just measuring the KPI of satisfaction to identifying the factors that have the biggest impact on customer satisfaction. That way, as soon as the business sees unsatisfied customers in their survey, they can go deeper into what exactly is causing that dissatisfaction, and address those problems directly - which we know from our research leads directly to happier customers that are less likely to churn.”

In situations where a customer faces a problem with your product and gets it solved instantly, the customer becomes more loyal to your brand and is likely to stick around for long.

3. Customer feedback identifies happy customers who can become advocates.

When you offer customers an experience that exceeds their expectations, you increase your chances of gaining customer advocates.

When you receive feedback from delighted customers, you can build on their satisfaction in your marketing strategy and incentivize them to spread the word about your business, both online and to those around them.

After all, customers are likely to recommend or refer your product/service to their friends or relatives, and this is a great way to stand out from your competition. Referrals are a free and effective way of marketing thanks to word of mouth, and according to the Wharton School of Business, referred customers cost less to acquire, too.

4. Customer feedback helps inform decisions.

Thanks to customer feedback, you get tangible data to make major decisions. These decisions are not based on your hunches, as you can gather insights into how your customers feel. You should use your customers' opinions to guide your product's future.

But if you don't measure customer satisfaction at all, you'll never know these things.

You'll be not-so-blissfully ignorant, and you'll risk these customers running to their friends and talking about what a wreck your customer experience is.

To avoid that type of publicity, let's dive into some specific survey examples and templates to get actionable customer feedback to improve your business.

Customer Satisfaction Survey Examples & Templates

Customer satisfaction surveys come in a few common forms, usually executed using a popular "one question" response scale methodology like:

- Net Promoter Score® (NPS)

- Customer Satisfaction Score (CSAT)

- Customer Effort Score (CES)

Each of these customer satisfaction survey methodologies measures something slightly different, so it's important to consider the specifics if you hope to use this data wisely.

Net Promoter Score

Net Promoter Score is a popular survey methodology, especially for those in the technology space.

It's rare to see a company now that doesn't use the famous question: "How likely is it that you would recommend this company to a friend or colleague?"

Ask your customers this question with HubSpot's customer feedback tool.

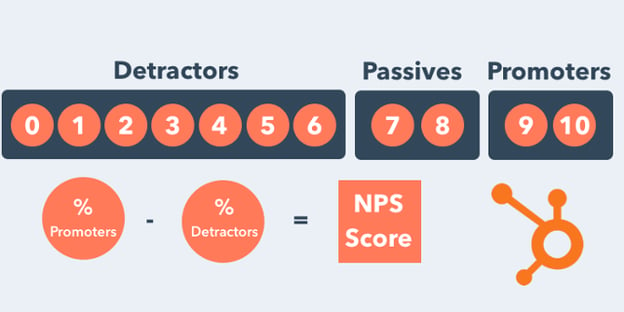

While this measures customer satisfaction to an extent, it's more aimed at measuring customer loyalty and referral potential. The way NPS is calculated, you end up with an aggregate score (e.g. an NPS of 38), but you can also easily segment your responses into three categories: detractors, passives, and promoters.

You calculate your Net Promoter Score by subtracting the percentage of Detractors from the percentage of Promoters.

In this way, NPS is useful both for aggregate measurement and improvement (e.g. we went from an NPS of 24 to 38 this year), but it's also great for segmenting customers based on their scores.

You can use that knowledge to inform customer marketing campaigns, expedite service to detractors, work on marginally improving the experience of passives, etc.
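The NPS calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not HubSpot's implementation: the function names are hypothetical, and the cutoffs (0 to 6 for detractors, 7 to 8 for passives, 9 to 10 for promoters) are the standard NPS buckets, which this post doesn't spell out.

```python
def segment(rating):
    """Bucket a 0-10 rating using the standard NPS cutoffs (assumed here)."""
    if rating >= 9:
        return "promoter"
    if rating >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(ratings):
    """Percentage of promoters minus percentage of detractors (-100 to 100)."""
    if not ratings:
        raise ValueError("need at least one rating")
    labels = [segment(r) for r in ratings]
    promoters = labels.count("promoter")
    detractors = labels.count("detractor")
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical sample of ten survey responses.
ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(net_promoter_score(ratings))  # 5 promoters, 3 detractors -> 20.0
```

Passives count toward the total number of responses but not toward either percentage, which is why moving a passive up to a promoter raises the score.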

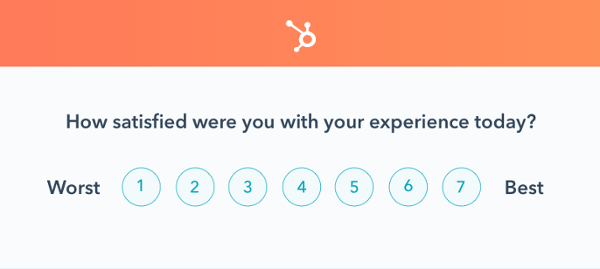

Customer Satisfaction Score (CSAT)

Customer Satisfaction Score (CSAT) is the most straightforward of the customer satisfaction survey methodologies. It's right there in the name; it measures customer satisfaction, straight up.

Usually, this is with a question such as "how satisfied were you with your experience," and a corresponding survey scale, which can be 1 – 3, 1 – 5, or 1 – 10.

There isn't a universal best practice as to which survey scale to use.
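Whichever scale you pick, you'll need a way to roll individual answers up into one number. The sketch below uses one common convention that this post doesn't prescribe: report the percentage of respondents who chose a "satisfied" rating (4 or 5 on a 1 – 5 scale). The function name and the `satisfied_min` parameter are assumptions for illustration only.

```python
def csat_percent(ratings, satisfied_min=4):
    """Share of responses at or above the 'satisfied' threshold, as a percentage.

    satisfied_min=4 assumes a 1-5 scale where 4 and 5 count as satisfied;
    adjust it if you use a 1-3 or 1-10 scale.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    satisfied = sum(1 for r in ratings if r >= satisfied_min)
    return 100 * satisfied / len(ratings)


# Hypothetical responses on a 1-5 scale.
responses = [5, 4, 3, 5, 2, 4, 4, 1]
print(csat_percent(responses))  # 5 of 8 satisfied -> 62.5
```

Because the threshold is a choice, keep it fixed once you start tracking the score, or your numbers won't be comparable over time.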

Ask your customers this question with HubSpot's customer feedback tool.

There's some evidence that the ease of the experience is a better indicator of customer loyalty than simple satisfaction. Therefore, the Customer Effort Score (CES) has become very popular recently.

Customer Effort Score (CES)

Instead of asking how satisfied the customer was, you ask them to gauge the ease of their experience.

Ask your customers this question with HubSpot's customer feedback tool.

You're still measuring satisfaction, but in this way, you're gauging effort (the assumption being that the easier it is to complete a task, the better the experience). As it turns out, making an experience a low-effort one is one of the greatest ways to reduce frustration and disloyalty.

Customer Satisfaction Question Types & Survey Design

Survey design is important, and forgetting to prioritize it is probably one of the biggest mistakes people make when conducting customer satisfaction surveys.

Just because you're not writing a blog post or an eye-catching infographic doesn't mean your survey shouldn't be engaging, relevant, and impactful. If the design is wrong, the data won't be useful for answering your questions about your customers.

Without diving too deeply into the esoteric world of advanced survey creation and statistical analysis, know this: How you pose the question affects the data you'll get in return.

There are a few different types of customer satisfaction survey questions.

I'll go through a few of them here and include some pros, cons, and tips for using them.

1. Binary Scale Questions

The first type of survey question is a simple binary distinction:

- Was your experience satisfying?

- Did our product meet expectations?

- Did this article provide the answer you were seeking?

- Did you find what you were looking for?

The answer options for all of these are dichotomous: yes/no, thumbs up/thumbs down, etc.

The benefit of this is its simplicity. In addition, most people tend to lengthen survey response scales to find deltas that may not mean that much. As Jared Spool, founder of UIE, said in a talk, "Anytime you're enlarging the scale to see higher-resolution data it's probably a flag that the data means nothing."

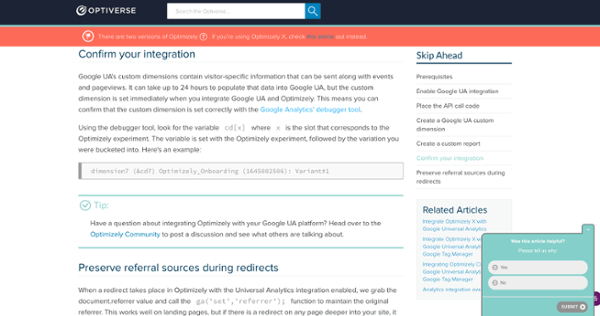

This is also a great question for something like a knowledge base, where a binary variable helps you optimize page content. Take, for example, Optimizely's knowledge base and their use of this question:

When you're running an A/B test on an ecommerce site, you have a binary variable of conversion: you converted or you didn't. We would often like to experiment similarly on knowledge base pages, but what's the metric? Time on page? Who knows, but if you do a binary scale survey at the end, you can quite easily run a controlled experiment with the same statistical underpinnings as an ecommerce conversion rate A/B test.
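To make that concrete, here's a rough sketch of how you might compare the "yes" rates of two versions of a knowledge base page. It uses a two-proportion z-test, one common choice for conversion-style experiments, and only Python's standard library. The function name and the sample counts are made up for illustration.

```python
import math


def two_proportion_z_test(yes_a, n_a, yes_b, n_b):
    """Compare two 'yes' rates; returns (z, two-sided p-value).

    Uses the pooled-proportion standard error and a normal approximation,
    which is reasonable only when each group has a decent number of responses.
    """
    p_a = yes_a / n_a
    p_b = yes_b / n_b
    pooled = (yes_a + yes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("rates are all 0% or all 100%; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: version A got 120 "yes" out of 200, version B got 150 out of 200.
z, p_value = two_proportion_z_test(120, 200, 150, 200)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference in "yes" rates is unlikely to be noise, but decide on your sample size before you look at the results, just as you would for an ecommerce test.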

Two cons with binary questions:

- You lack nuance (which in some circumstances could be a benefit).

- You may induce survey fatigue on longer surveys with many questions.

If it's a long survey with many questions, customers can tire out and lean towards positive answers (this isn't a problem when you just have one or two questions, of course).

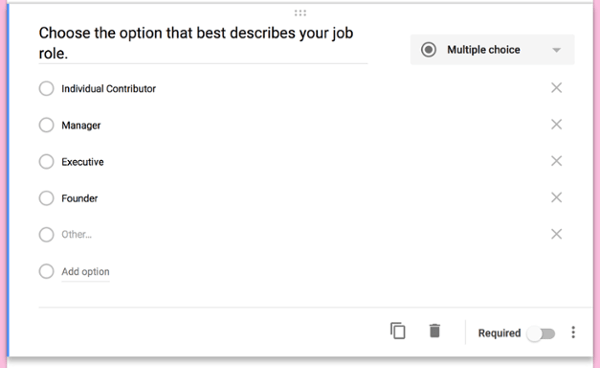

2. Multiple-Choice Questions

Multiple-choice questions have three or more mutually exclusive options.

These tend to be used to collect categorical variables, things like names and labels.

In data analysis, these can be incredibly useful to chop up your data and segment based on categorical variables.

For example, in the context of customer satisfaction surveys, you could ask them what their job title is or what their business industry is, and then when you're analyzing the data, you can compare the customer satisfaction scores of various job titles or industries.
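As a rough sketch of that kind of segmentation, the snippet below groups survey responses by a categorical answer and averages the satisfaction score within each group. The field names (`industry`, `job_title`, `score`) and the sample data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def average_score_by_segment(responses, segment_key):
    """Group responses by a categorical field and average the score per group."""
    groups = defaultdict(list)
    for response in responses:
        groups[response[segment_key]].append(response["score"])
    return {segment: mean(scores) for segment, scores in groups.items()}


# Hypothetical survey export: one dict per respondent, scores on a 1-5 scale.
responses = [
    {"industry": "Retail", "job_title": "Manager", "score": 4},
    {"industry": "Retail", "job_title": "Analyst", "score": 2},
    {"industry": "Software", "job_title": "Manager", "score": 5},
    {"industry": "Software", "job_title": "Analyst", "score": 4},
]

by_industry = average_score_by_segment(responses, "industry")
by_title = average_score_by_segment(responses, "job_title")
print(by_industry)
print(by_title)
```

With real data, check how many responses sit in each segment before drawing conclusions, since an average over two or three people can swing wildly.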

When posing multiple-choice questions on a survey, keep in mind your goals and what you'll do with the data.

If you have a ton of multiple-choice questions, you can induce survey fatigue which will skew your data, so keep it to questions you believe have important merit.

3. Scale Questions

Almost all popular satisfaction surveys are based on scale questions. For example, a CSAT survey asks, "How satisfied were you with your experience?" and you rate the experience on a scale of 1-5 (a Likert scale).

The survey scale could be composed of numbers or you could use labels, such as “strongly disagree, disagree, neutral, agree, and strongly agree."

There are many pros to using scale questions.

- It's an industry standard and your customers will completely understand what to do when presented with the question.

- You can very easily segment your data to make decisions based on individual survey responses.

- You can easily measure data longitudinally to improve your aggregate score over time.

There's only one real disadvantage in my book: There's no qualitative insight. Therefore, you're left guessing why someone gave you a two or a seven.

Pro Tip: Couple survey scale questions with open-ended feedback questions to get the best of both worlds.

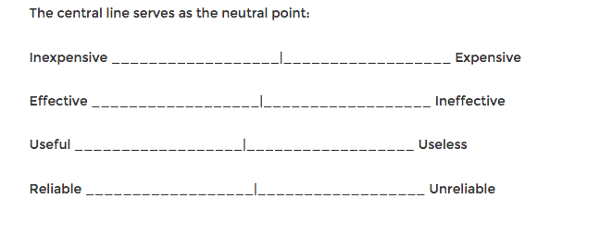

4. Semantic Differential

Semantic differential scales are based on binary statements but you're allowed to choose a gradation of that score.

So you don't have to pick just one or the other; you can choose a place between the two poles that accurately reflects your experience.

These have a similar use case to the survey response scale, but interestingly enough, if you analyze semantic differential scales they often break into two factors: positive and negative. So, they give you very similar answers to binary scales.

5. Open-Ended Questions

As I mentioned, the above survey questions don't allow for qualitative insights. They don't get at the “why" of an experience, only the “what."

Qualitative customer satisfaction feedback is important. Our own Market Research manager, Amy Maret, continues, “Qualitative questions are great for allowing customers to tell you the real ‘why’ behind their satisfaction, without you having to make assumptions about what matters to them. When you want to dig deeper into motivations and underlying factors, it is helpful to hear from customers in their own words. But be careful - too many open-ended questions in one survey can cause respondent fatigue - potentially frustrating your customers and damaging your data quality.”

Open-ended questions help businesses identify the value customers see in the product and learn what matters most to them, which you won't glean from a numerical or multiple-choice survey.

It's easier to skew qualitative data and cherry-pick insights than you'd think, though, so be mindful of personal bias when you start deciding which questions to ask.

And getting qualitative responses helps you close the loop here, too. Instead of simply reaching out in ignorance and concern about a low satisfaction score, if you ask a qualitative survey question (and receive an answer), you can respond with a specific fix down the line.

When Should You Send Customer Satisfaction Surveys?

- As soon as possible after an interaction with customer support

- After some time has passed after a customer's initial purchase (the length of time will vary depending on your product or service)

- At different stages of the customer lifecycle to measure how satisfaction evolves throughout the customer journey

When to send your customer satisfaction surveys is another important question to consider.

When you pop the question also determines the quality of the data. While there are different strategies for conducting these surveys, ask enough experts and you'll hear this common mistake: Most companies ask too late.

Companies might be tempted to use "the autopsy approach" to customer satisfaction: waiting until an event is over to figure out what went wrong with a customer. Instead, customers should be asked questions while their feedback could still have an impact.

Ideally, you'll deploy customer satisfaction surveys at different times to get different views of the customer experience at different life cycle stages.

So, your first directive is to align your survey points with points of value that you'd like to measure in the customer experience. The more touchpoints you measure, the more granular your picture of the customer experience can be.

When to send your survey also depends on what type of survey it is.

Specifically, with regard to CSAT surveys, we recommend sending them as soon as possible after an interaction with customer support to capture the experience while it's still fresh.

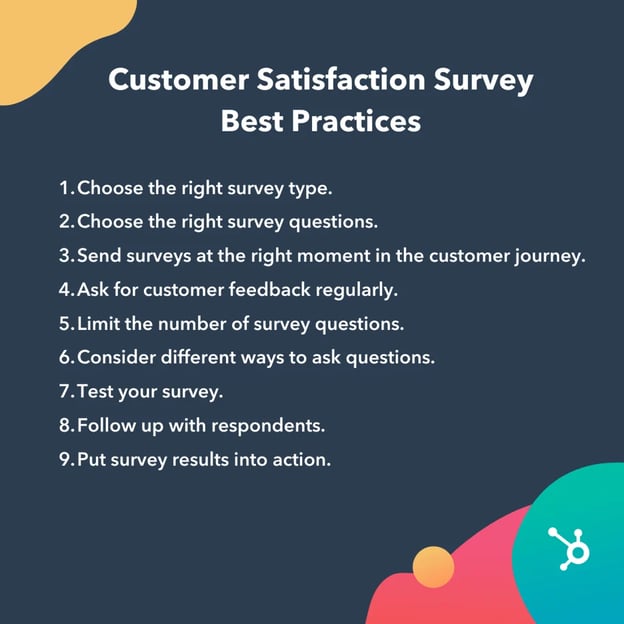

Customer Satisfaction Survey Best Practices

- Choose the right survey type.

- Choose the right survey questions.

- Send surveys at the right moment in the customer journey.

- Ask for customer feedback regularly.

- Limit the number of survey questions.

- Consider different ways to ask questions.

- Test your survey.

- Follow up with respondents.

- Put survey results into action.

1. Choose the right survey type.

Before you start collecting customer feedback, it's important to pick a survey type that best suits your team's goals. Think about the information you're trying to obtain and how you'd like to capture it. Are you looking for quantitative data or qualitative feedback?

If you're looking for results that are easy to sort and can highlight major trends at a glance, then you may want to consider an NPS or CSAT survey. But, if you're looking for more descriptive information outlining a customer's experience, then you should use a CES survey.

2. Choose the right survey questions.

Another key to obtaining the feedback you're looking for is picking the right survey questions. For this step, take your time and make thoughtful decisions because the type of questions you include will play a major role in the quality of feedback you receive.

Additionally, don't be afraid to use multiple question types within the same survey. Just make sure each type is grouped together so that the experience is more delightful for the respondent.

3. Send surveys at the right moment in the customer journey.

With surveys, timing is everything. If you deploy your survey at the wrong moment, you're not going to see successful results.

This means you should isolate the ideal touchpoints in the customer journey to ask people for feedback. Typically, these are times when your company has completed an interaction with the customer and has either met or failed to meet their need. For example, right after a support agent closes a ticket is a great time to send a survey.

4. Ask for customer feedback regularly.

Customers are smart — like really smart. And, if you only send your surveys out after poor interactions, they'll be reluctant to fill them out. That's because they know that you're only reaching out to keep their business, and not because you care about their satisfaction.

Instead, you should collect feedback regularly to show that you're constantly trying to improve customer experience. This demonstrates a long-term commitment to customer satisfaction and building rapport with your customer base. Plus, this will give you more diverse feedback that's not solely positive or negative.

5. Limit the number of survey questions.

If you're new to surveys, determining the right length can be tricky. Asking too many questions will cause customers to abandon your survey, but asking too few wastes an opportunity to obtain information. Finding the right balance will optimize your survey's completion rate.

While there's no universal standard for survey length, most research suggests that the ideal length should be between 10 and 20 minutes. If it takes longer than 20 minutes to complete, participants will lose interest and your abandonment rate will start to increase.

6. Consider different ways to ask questions.

When you're coming up with survey questions, pay attention to how you frame them. The language you use will impact how participants answer your prompt. If it's biased or encouraging them to answer in a certain way, this will skew your survey results. If you're not sure if your survey is biased, have a few employees or peers take it and ask for their feedback.

7. Test your survey.

Before you deploy your survey, you should test it with your target audience. Instead of sending it to every customer at once, send it to a small group and see what type of results you get. Follow up with these customers as well and ask for their feedback on ways to improve the survey experience. Once you feel comfortable that you've created an effective survey, then you should send it to the rest of your customer base.

8. Follow up with respondents.

Now that you've got insights into your customer satisfaction levels, it's important to close the loop and follow up with customers in a meaningful way. Why let the data lie dormant when there are so many proactive efforts you can take here?

Closing the feedback loop with valuable customers who complete your satisfaction survey is simultaneously the most important and most often ignored step in a successful customer satisfaction measurement campaign.

Making sure your team acknowledges and thanks anyone that completed the survey is critical to ensuring that customers will continue to provide you feedback — because it's about building trust and showing them value.

You can't always pivot and deliver on every piece of feedback that comes through — especially since some customer feedback just might not be of great value. But you can address every piece of feedback that comes through in some way — because responding, even if what the customer is requesting is not something you will do, is always better than no response at all.

9. Put survey results into action.

As with any form of data collection, one of the biggest mistakes is putting all that effort into data collection and analysis, but then coming up short when it comes to action. But that's why we collect data: to inform decisions.

How you act on your customer satisfaction data will vary according to the company and the situation (as well as resources available and many other variables), but it's important to have a plan of action. Ask yourself, "If I receive X feedback, what will I do with that information?"

Just asking this question will put you on a trajectory to improve your customer experience, as well as put you on a continuous customer feedback loop of better customer insights and actionable takeaways.

Results Begin with a Survey

We hope this post empowers you to take action and ask customers about their experiences with your business. If you gather enough feedback, you can turn those critiques into innovations that make your product or service even more spectacular.

Net Promoter, Net Promoter System, Net Promoter Score, NPS and the NPS-related emoticons are registered trademarks of Bain & Company, Inc., Fred Reichheld and Satmetrix Systems, Inc.

Editor’s Note: This post was originally published in February 2020 and has been updated for comprehensiveness.
